Building a VMware PhotonOS-based Kubernetes Appliance for vSphere

Why a Kubernetes-Appliance?

Learning Kubernetes (K8s) is still in great demand, and that demand shows no sign of decreasing. Starting with the theory is essential, but as we all know, the fun part starts with practice. Quickly instantiating a Kubernetes cluster has become easy, and I’m pretty sure it won’t take long until KinD crosses your path. But sometimes a local K8s instance isn’t enough, and practicing low-level Kubernetes implementations, e.g. for networking (CNI) or storage (CSI), on real cloud environments is crucial.

If you work with vSphere like me, you surely appreciate the simplicity of the VMware Open Virtualization Appliance (OVA) format. Not only are the majority of VMware solutions provided in this format, it’s also pretty popular for building and providing VMware Flings.

The VMware open-source project VMware Event Broker Appliance (VEBA) is one example.

So why not develop an easily deployable Kubernetes-Appliance that you can quickly roll out on vSphere, e.g. for testing, development or learning purposes?

Kubernetes-Appliance Project on GitHub

As part of the VMware internal team around the VEBA project, I had the early opportunity to dive deeper into the build process of such an appliance, which was excellently designed by William Lam and Michael Gasch. So I took the code base of the v0.6.0 release, stripped it down to its essential pieces, adjusted it accordingly and added new code so that it met my requirements.

All details on how to build the appliance on your own, as well as a download link, are available on the project homepage on GitHub.

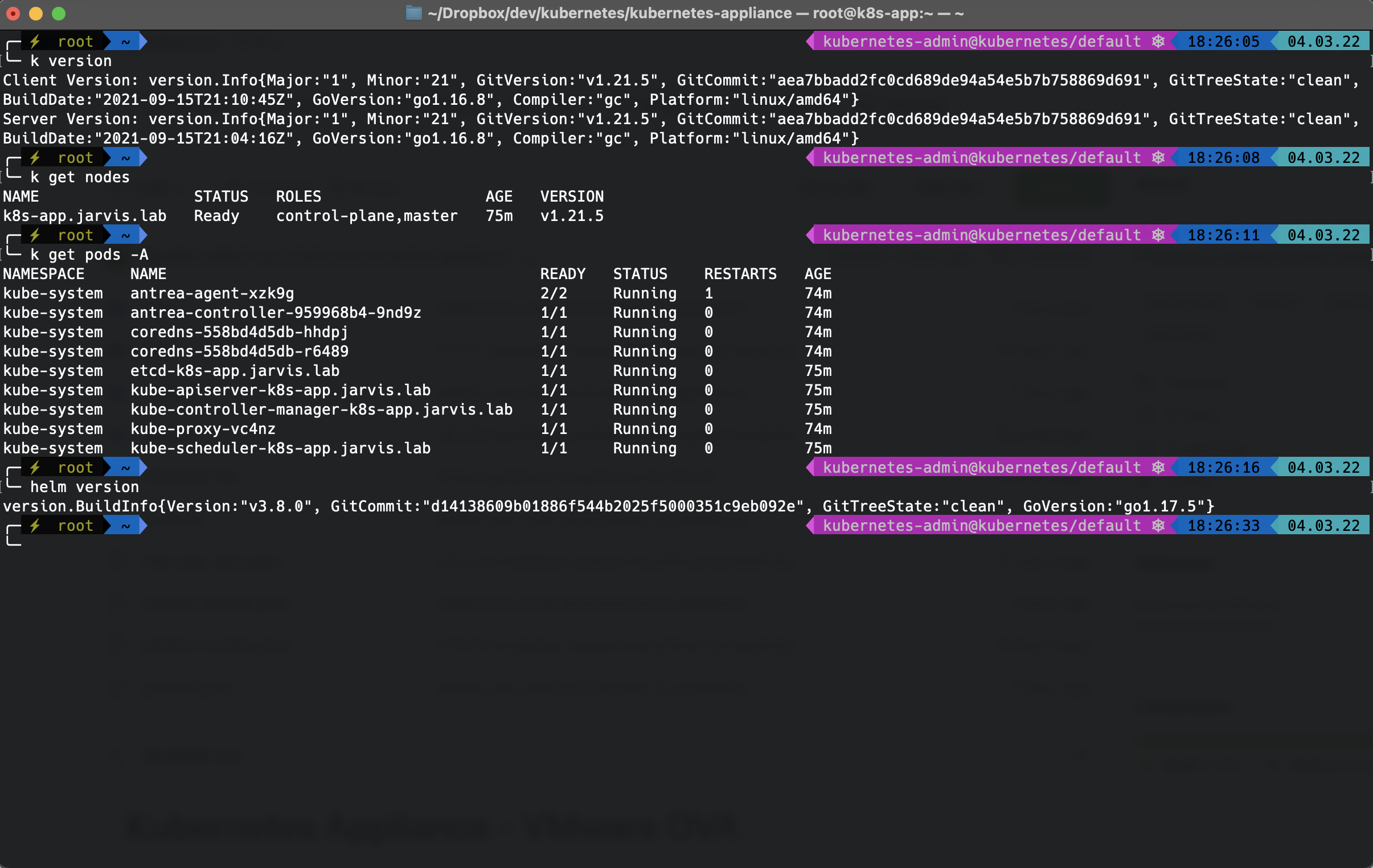

Kubernetes-Appliance Core Components

The appliance is built on the following core components:

| Operating System | CNI | CSI |
|---|---|---|
| VMware PhotonOS | Antrea | rancher/local-path-provisioner |
| Open-source minimalist Linux operating system that is optimized for cloud computing platforms, VMware vSphere deployments, and applications native to the cloud. | A Kubernetes-native project that implements the Container Network Interface (CNI) and Kubernetes NetworkPolicy thereby providing network connectivity and security for pod workloads. | Provides a way for the Kubernetes users to utilize the local storage in each node. |

Additional Installations/Goodies

As you get deeper into Kubernetes and your time on the shell increases heavily, it’s a pretty good idea to enhance your shell experience with additional CLIs, tools, shell extensions and themes to make your life easier and more colorful. Therefore, after deploying the appliance and connecting to it via SSH, you will get to use:

Details on the ZSH configuration here: setup-05-shell.sh

Deployment Options

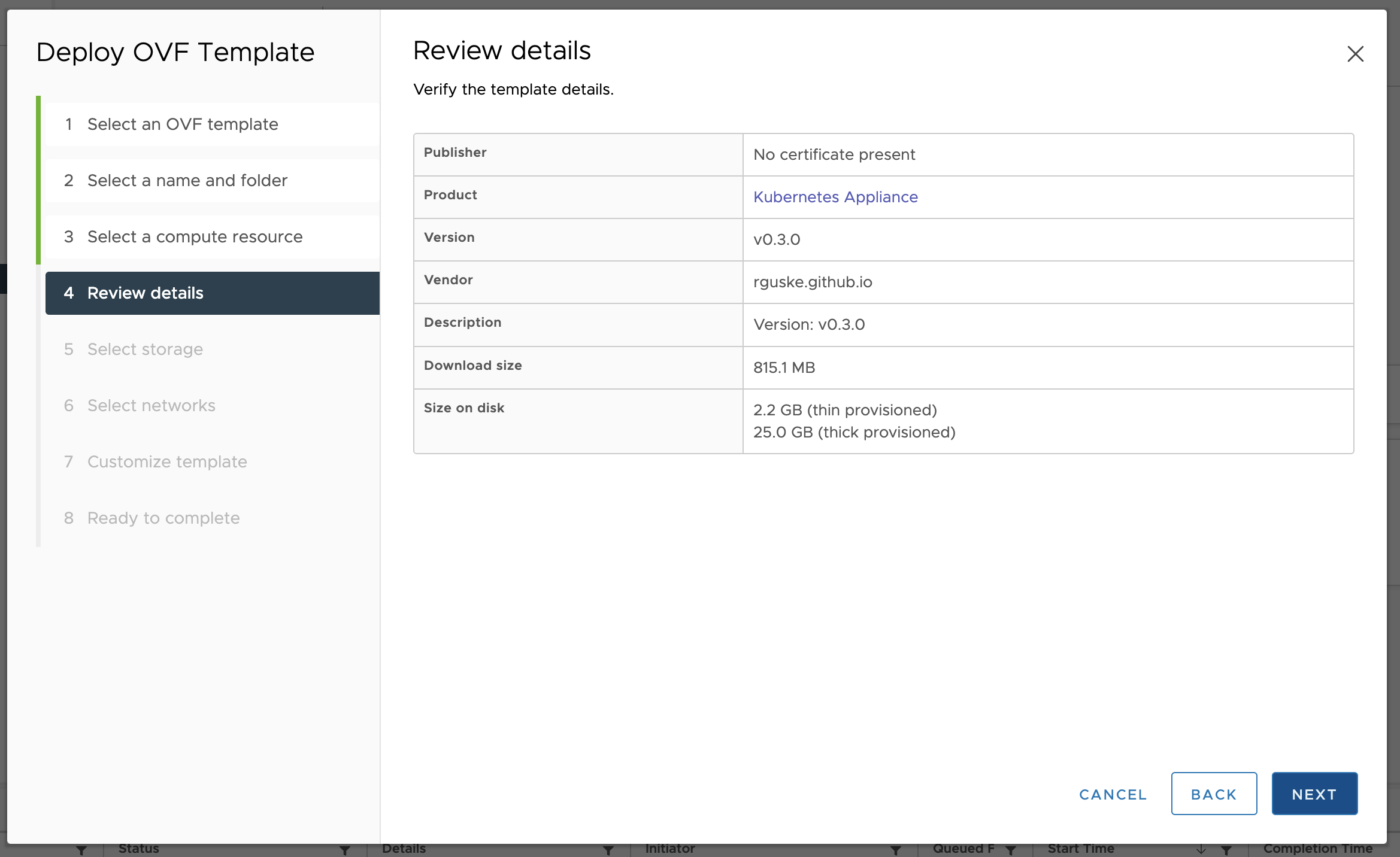

The following options will be available when deploying the appliance onto vSphere.

- Review Details

Compare the displayed version against the release versions on GitHub to make sure you are deploying the latest one.

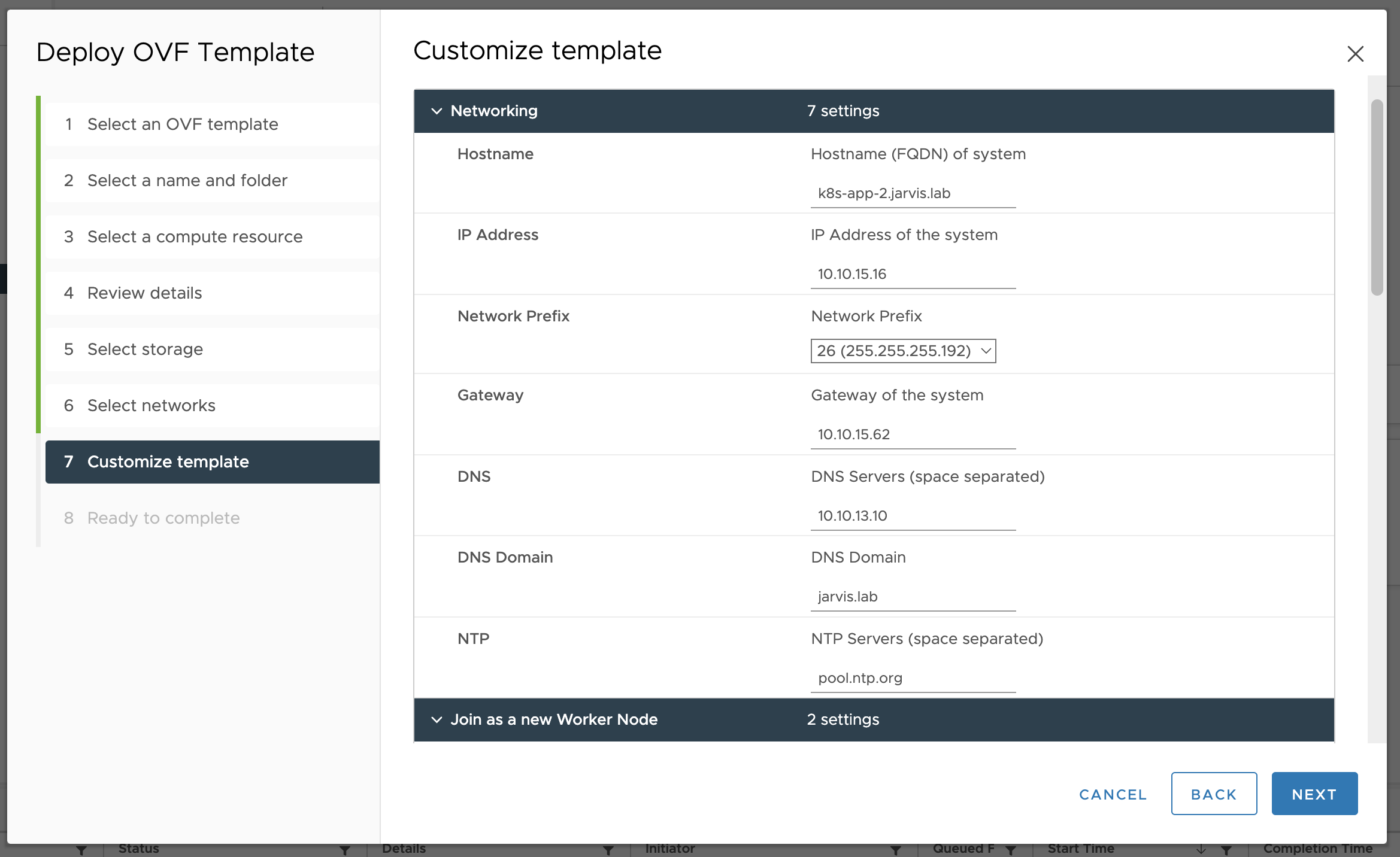
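If you would rather script that comparison than eyeball it, here is a minimal Python sketch. It assumes the release tags follow a `vMAJOR.MINOR.PATCH` pattern (like the `v0.6.0` mentioned earlier); the helper names `parse_version` and `is_latest` are made up for this example and are not part of the appliance.

```python
from typing import Iterable, Tuple


def parse_version(tag: str) -> Tuple[int, ...]:
    """Turn a tag such as 'v0.6.0' into a tuple of ints, e.g. (0, 6, 0).

    Assumption: tags are purely numeric after an optional leading 'v'.
    """
    return tuple(int(part) for part in tag.lstrip("v").split("."))


def is_latest(deployed: str, released: Iterable[str]) -> bool:
    """Return True if `deployed` is at least as new as every released tag."""
    newest = max(parse_version(tag) for tag in released)
    return parse_version(deployed) >= newest


if __name__ == "__main__":
    # Hypothetical tags, used only to illustrate the comparison.
    print(is_latest("v0.3.0", ["v0.1.0", "v0.2.1", "v0.3.0"]))
```

Comparing tuples of integers rather than raw strings matters once a version component reaches two digits: as strings, `"v0.10.0"` would sort before `"v0.9.0"`.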

- Networking

Enter the necessary data for the appliance networking configuration.

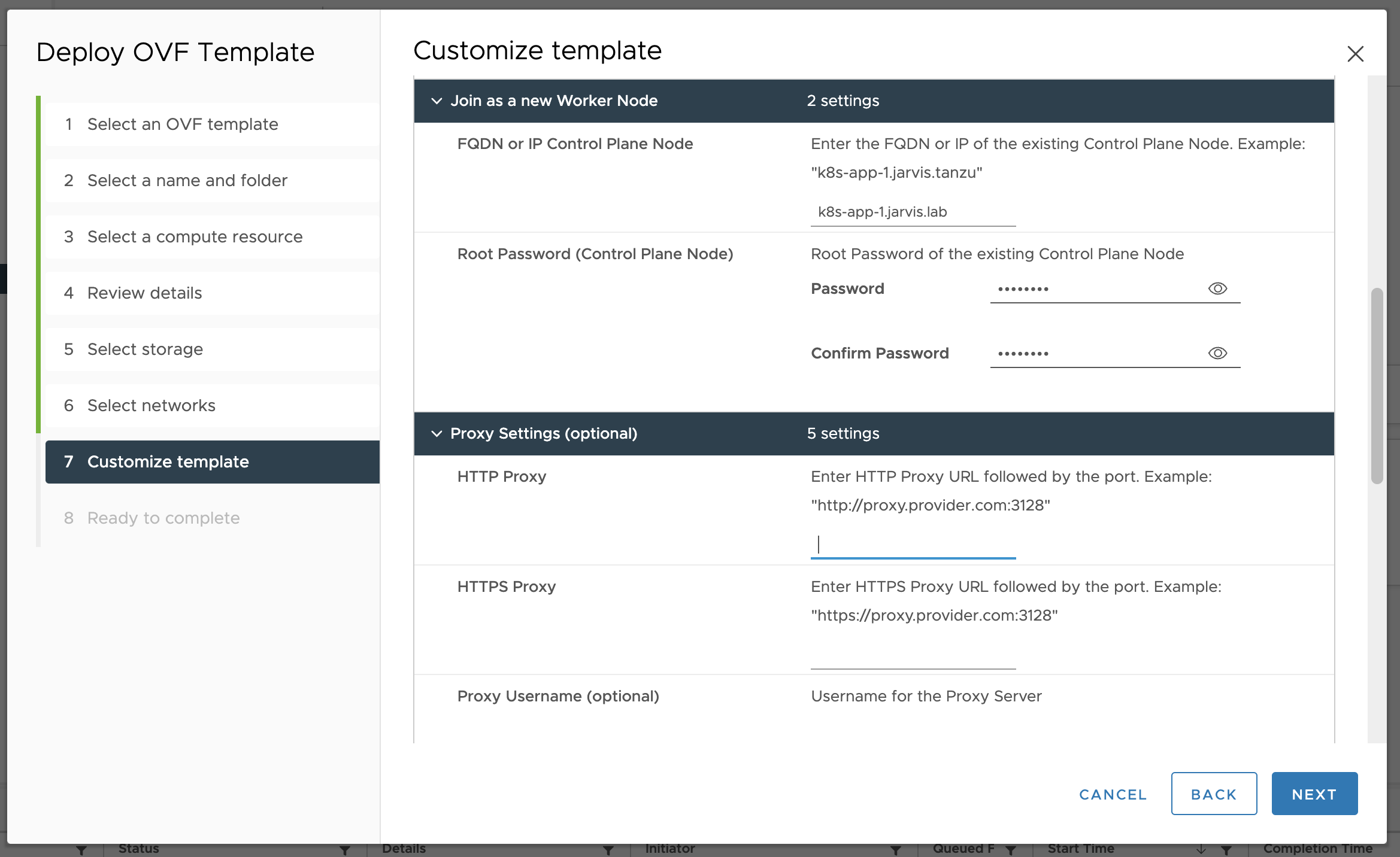

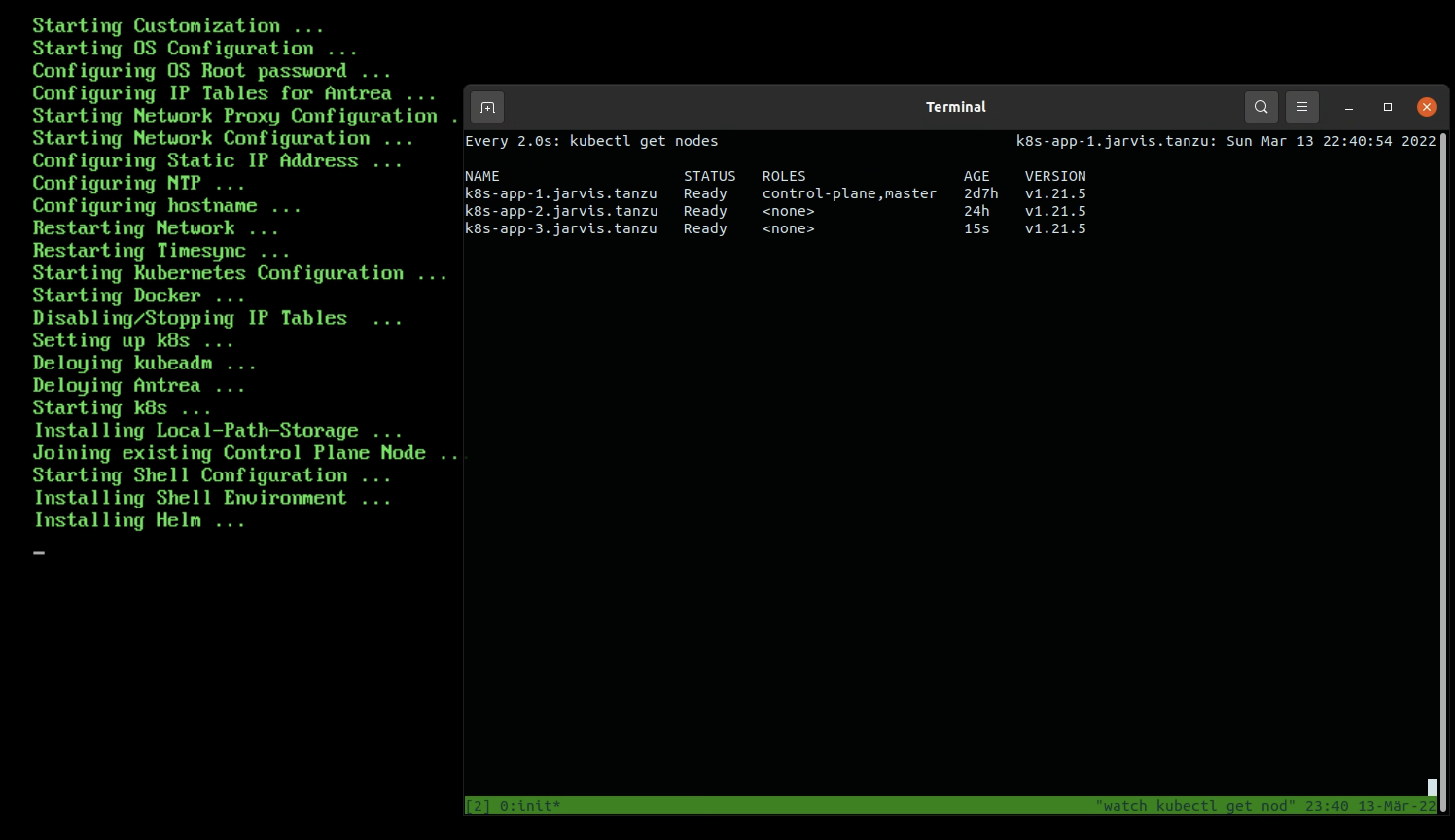

Feature: Join an existing K8s-Appliance

Since the Kubernetes part of the appliance is installed using kubeadm, I thought an option to join an existing K8s-Appliance node, offered while running through the OVA deployment sections, could be a cool feature.

Details: setup-04-kubernetes.sh

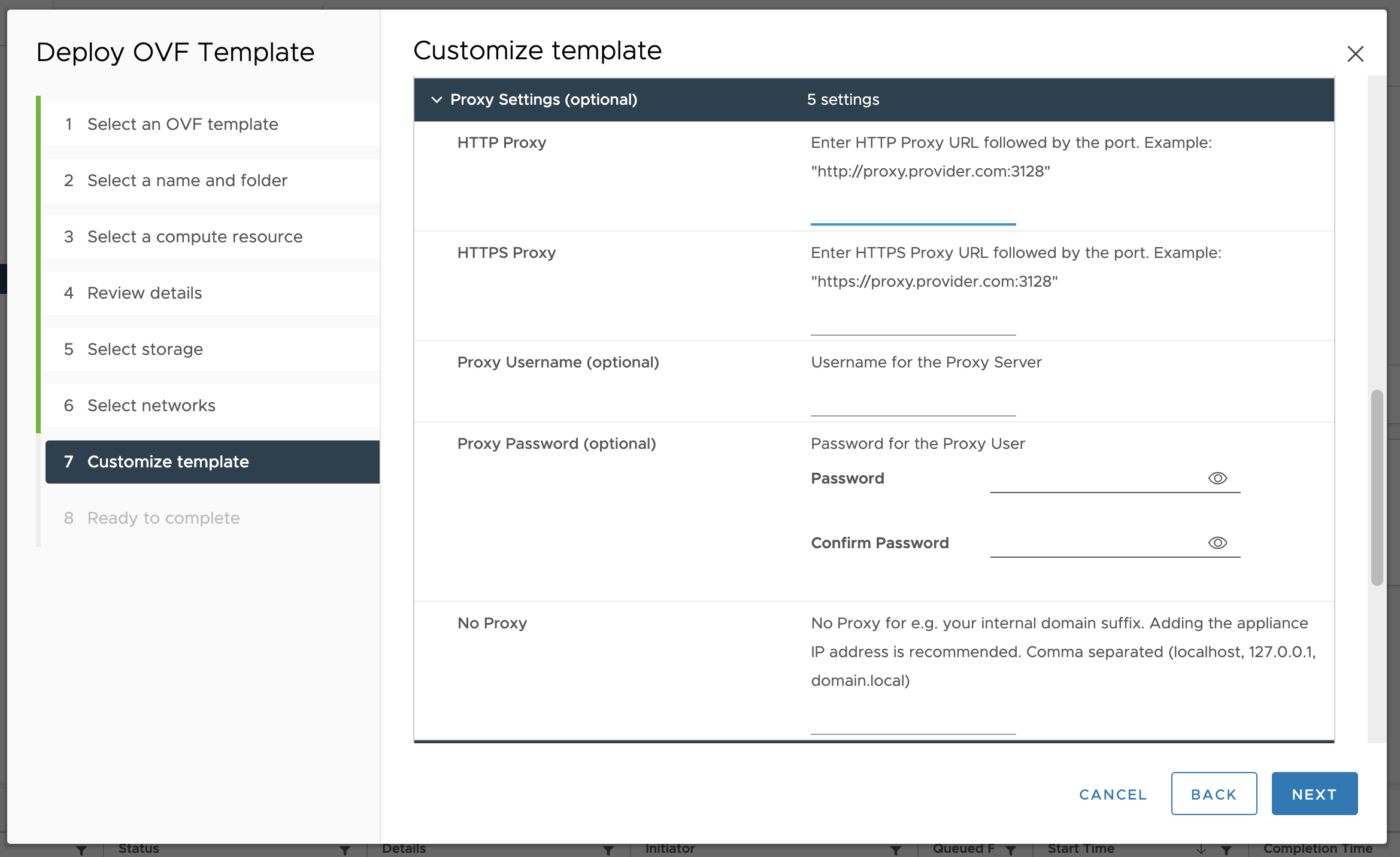
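For readers who have not used kubeadm before, the following Python sketch shows the general shape of the command a new node runs to join an existing cluster. It is only an illustration: the helper name `build_join_command` is hypothetical, the endpoint, token and hash are placeholders, and what the appliance actually does is defined in setup-04-kubernetes.sh and may differ.

```python
import shlex


def build_join_command(endpoint: str, token: str, ca_cert_hash: str) -> str:
    """Assemble a `kubeadm join` command line for a joining node.

    On a real cluster, the values come from running
    `kubeadm token create --print-join-command` on the existing node.
    """
    args = [
        "kubeadm", "join", endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", ca_cert_hash,
    ]
    # shlex.join quotes each argument, so the result can be pasted into a shell.
    return shlex.join(args)


if __name__ == "__main__":
    print(build_join_command(
        "192.168.1.10:6443",        # hypothetical API server address and port
        "abcdef.0123456789abcdef",  # placeholder bootstrap token
        "sha256:<hash>",            # placeholder, deliberately not a real hash
    ))
```

The sketch only builds the string and never executes it, which makes it safe to run anywhere while you get familiar with the parameters.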

- Proxy Configuration

The appliance deployment supports the configuration of a proxy. The configuration applies at the OS level as well as to Docker.

See here: setup-02-proxy.sh

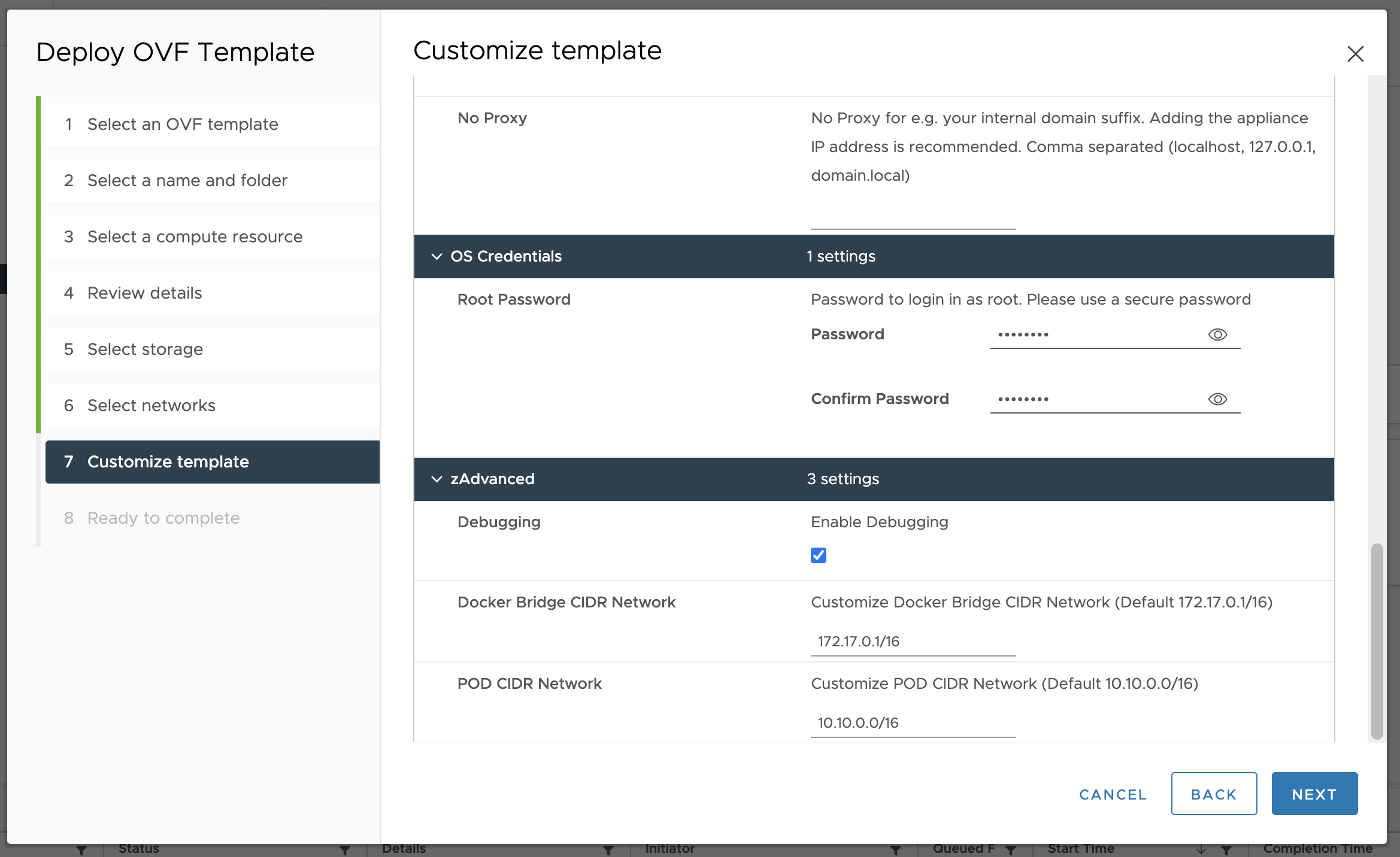
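To give an idea of what a Docker proxy configuration usually looks like, here is a small Python sketch that renders a systemd drop-in for the Docker service, the mechanism described in the Docker documentation. Whether setup-02-proxy.sh uses exactly this mechanism is an assumption on my part; the function name `render_docker_proxy_dropin` and the proxy URLs are hypothetical.

```python
from typing import Iterable


def render_docker_proxy_dropin(
    http_proxy: str, https_proxy: str, no_proxy: Iterable[str]
) -> str:
    """Render the content of a systemd drop-in that sets proxy variables.

    Such a file is commonly placed at
    /etc/systemd/system/docker.service.d/http-proxy.conf.
    """
    no_proxy_value = ",".join(no_proxy)
    lines = [
        "[Service]",
        f'Environment="HTTP_PROXY={http_proxy}"',
        f'Environment="HTTPS_PROXY={https_proxy}"',
        f'Environment="NO_PROXY={no_proxy_value}"',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical proxy endpoint and exclusions, for illustration only.
    print(render_docker_proxy_dropin(
        "http://proxy.example.com:3128",
        "http://proxy.example.com:3128",
        ["localhost", "127.0.0.1"],
    ), end="")
```

After writing such a file by hand, Docker only picks it up once systemd has reloaded its units and the Docker service has been restarted.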

- OS Credentials and Advanced

Specify the password for the root user and choose whether you want to enable the debug log (/var/log/bootstrap-debug.log).

Finally, if necessary, adjust the CIDRs for Docker bridge networking and/or for the Kubernetes pods.
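The usual reason to change these ranges is an overlap with a network you already use. A quick way to check this up front is Python's standard `ipaddress` module, as in the sketch below. The network names and CIDR values are examples only, not the appliance's defaults, and `find_overlaps` is a hypothetical helper.

```python
import ipaddress
from typing import Dict, List, Tuple


def find_overlaps(networks: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return every pair of named networks whose address ranges overlap."""
    # strict=False also accepts host-style values such as 172.17.0.1/16.
    parsed = {
        name: ipaddress.ip_network(cidr, strict=False)
        for name, cidr in networks.items()
    }
    names = sorted(parsed)
    overlaps = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if parsed[first].overlaps(parsed[second]):
                overlaps.append((first, second))
    return overlaps


if __name__ == "__main__":
    # Example values only; replace them with your own ranges.
    print(find_overlaps({
        "docker-bridge": "172.17.0.1/16",
        "pods": "10.10.0.0/16",
        "node-network": "192.168.1.0/24",
    }))
```

An empty list means none of the ranges collide; any pair that is returned is a candidate for adjustment in the deployment wizard.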

That’s it. I hope the appliance will serve you in the way I thought it could do 😄

This is pure gold, will give it a try for sure shortly

— Jorge de la Cruz (@jorgedlcruz) March 10, 2022