Rebuilding from the Break - Restoring the VMware Avi Controller

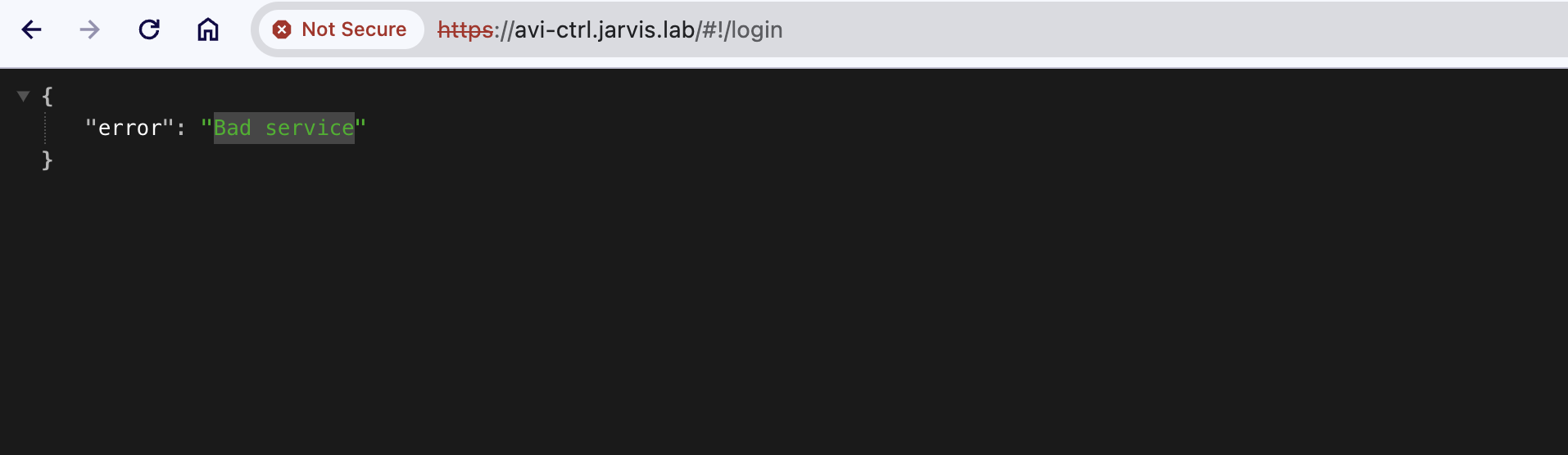

Avi Portal - Bad Service

Oh my! I wasn’t expecting a three-part series to come out of my recent homelab crash, but here we are 😄 Check out my recent posts, Database Resurrection - Reviving vPostgres DB on VMware vCenter Server and Fixing vCenter Postgres Archiver Service - Dead Postgres Replication Slot, for my experiences with recovering the vCenter Server database.

Actually, I thought I was “out of the woods” after fixing the broken vCenter Server, but unfortunately my Avi Controller was also affected by the outage.

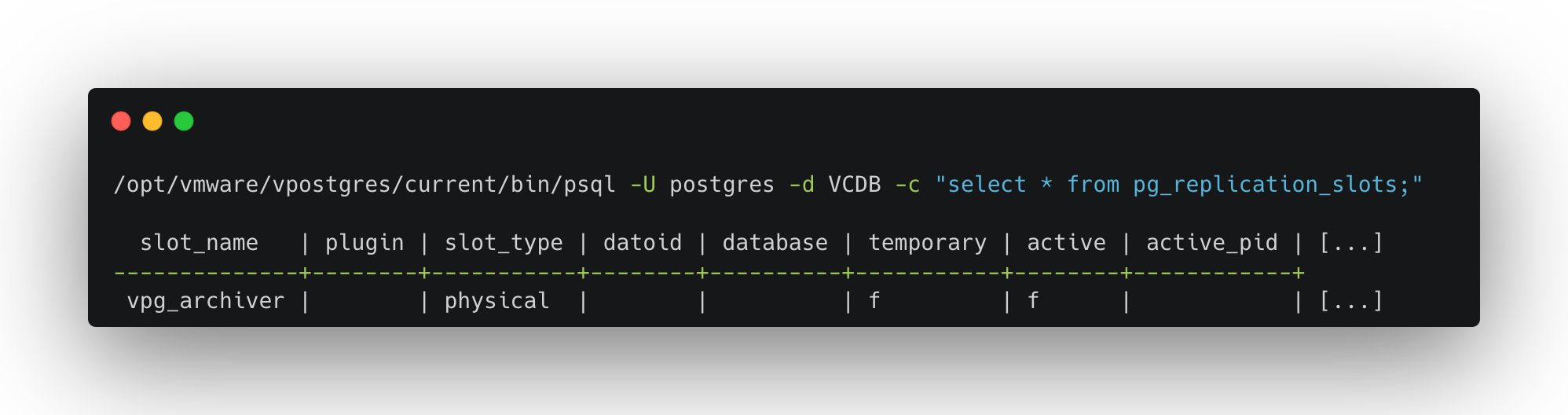

Fixing vCenter Postgres Archiver Service - Dead Postgres Replication Slot

Of course I run Backups 🤥

This time I got lucky with the outcome of my recent homelab crash. If I hadn’t been able to fix my broken vCenter Server, as described in my previous article, I would have had to reinstall my vSphere (+ vSAN, + Tanzu) environment basically from scratch.

Is this actually true?

Actually NO! If I had configured my vSphere environment correctly, the vCenter Server file-based backup would have been set up properly and I wouldn’t have had to worry about the consequences in the end.

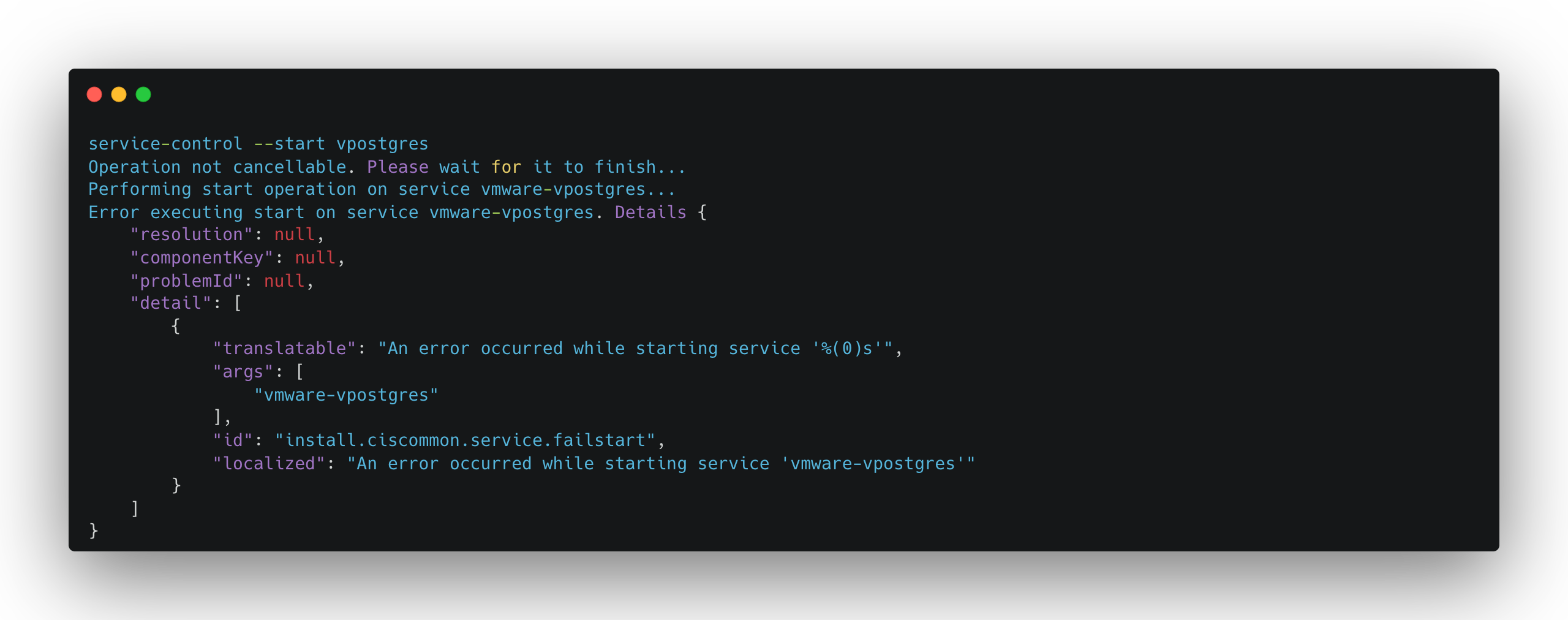

Database Resurrection - Reviving vPostgres DB on VMware vCenter Server

Power Failure causes Problems again

Once in a while power failures happen and can (mostly will!) cause trouble for small homelabs like mine. I’m running a two-node vSAN cluster on two Supermicro SYS-E200-8D servers. My vSAN Witness Appliance is running on a small Intel NUC, which is perfectly suited for this use case. The vCenter Server is still running on the vSAN cluster, but only compute-wise. I also have a Synology NAS that provides an additional NFS datastore, so that at least the VCSA (VM) storage is kept outside the cluster.

Elevate your Cloud-Native Journey: Knative the VMware Tanzu Way Part I - Streamlined Installation of Knative

Knative 💙

In the rapidly evolving landscape of modern software architecture, event-driven systems have emerged as a pivotal paradigm, empowering organizations to create highly responsive, scalable, and adaptable applications. Knative stands at the forefront of this revolution, offering a robust and flexible framework for building event-driven architectures (EDA) that seamlessly integrate diverse components, enhance automation, and enable real-time data processing.

I’ve been evangelizing and supporting the Knative project for quite some time already. I recently had the pleasure of demonstrating parts of its comprehensive feature set at the great ContainerDays event in beautiful Hamburg, Germany.

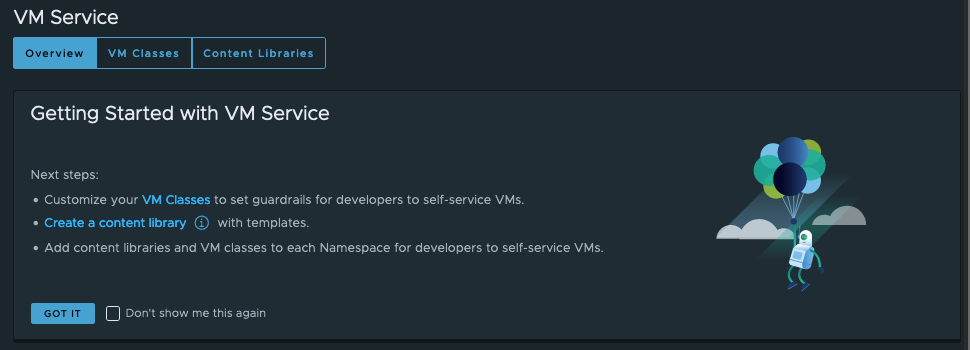

vSphere with Tanzu Supervisor Services Part IV - Virtual Machine Service to support Hybrid Application Architectures

Hybrid Application Architectures

As technology advances at a rapid pace, the landscape of application development continues to evolve. The demand for agility, scalability, and cost-effectiveness has given rise to a new breed of architectures that seamlessly integrate modern cloud-native principles with established traditional workloads. One such paradigm that has gained significant traction is the hybrid application architecture, which combines the power of service-oriented architectures (SOA) with the reliability and versatility of virtual machines (VMs).